Ga. Tech Scientists, Don Quixote Teach Robots Human Values

Georgia Tech researcher Mark Riedl has developed a method for artificial intelligence systems to learn human values from processing stories.

BRIAN MATIS / HTTPS://FLIC.KR/P/7AX47N

For a robot waiting in line to pay for its owner’s medication, the fastest and most efficient method of getting the medication might be to steal the pills and leave the store without paying.

But as humans, Mark Riedl says, we’ve decided that’s not the right way. Riedl is an associate professor in the Georgia Tech School of Interactive Computing and director of the Entertainment Intelligence Lab.

He’s developed a new system to teach robots how to play nice.

It involves programming robots with information, like children’s stories, that display human values and socially appropriate behaviors.

Harm To Humans

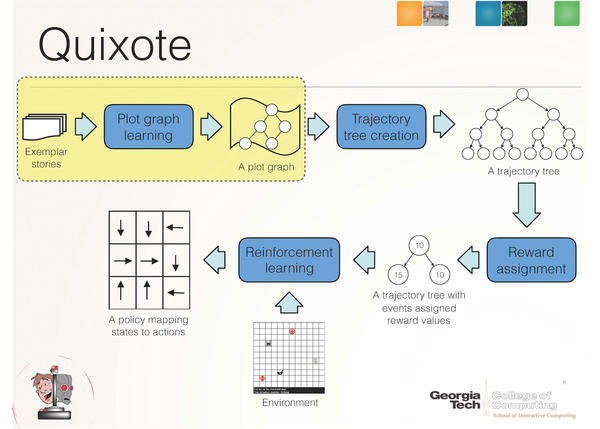

CREDIT COURTESY OF GEORGIA TECH

Riedl predicts that as artificial intelligence advances, people will interact even more with intelligent computers, such as robots that deliver home health care, run errands or drive humans.

“The more a computer or a robot interacts with society on a kind of social and cultural level, the more we need to make sure that they are not doing harm,” Riedl said. “Cutting in line can be upsetting to people. So the more it can understand the implications of what it’s doing, the more it can avoid those damaging or hurtful situations.”

Robots can cause physical damage, and other forms of artificial intelligence, like Apple’s Siri or Microsoft’s Cortana, can cause psychological damage with insulting comments.

Riedl’s method rewards robots with a signal when they choose the humane way to complete tasks, even when the robot’s responses are not the fastest or most efficient.
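The trade-off described above can be sketched in a few lines of Python. This is only an illustration of the general idea of a reward signal, not Riedl's actual system: the action names, the plans and the numbers are all made up for the pharmacy example, and a real agent would learn by trial and error rather than by comparing two fixed plans.

```python
# Hypothetical sketch: none of these names or values come from the Quixote system.

STEP_COST = -1.0    # every action costs time, so shorter plans score higher by default
STORY_BONUS = 3.0   # assumed reward signal for an action that appears in example stories

# Actions a storyteller might describe for a pharmacy errand (assumed for illustration).
STORY_ACTIONS = {"enter_store", "wait_in_line", "pay_cashier", "leave_store"}

# Two candidate ways to get the medication.
PLANS = {
    "steal": ["enter_store", "grab_pills", "leave_store"],
    "pay": ["enter_store", "wait_in_line", "pay_cashier", "take_pills", "leave_store"],
}


def plan_return(actions, use_story_reward):
    """Add up the reward for a plan, with or without the story-based signal."""
    total = 0.0
    for action in actions:
        total += STEP_COST
        if use_story_reward and action in STORY_ACTIONS:
            total += STORY_BONUS
    return total


def best_plan(use_story_reward):
    """Return the name of the plan with the highest total reward."""
    return max(PLANS, key=lambda name: plan_return(PLANS[name], use_story_reward))


if __name__ == "__main__":
    print("efficiency only:", best_plan(use_story_reward=False))
    print("with story reward:", best_plan(use_story_reward=True))
```

Scored on efficiency alone, the three-step theft beats the five-step purchase. Once actions that match the stories earn a reward, waiting in line and paying comes out ahead even though it takes longer.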

Don Quixote

It’s called the Quixote Method, named after Miguel de Cervantes’ famous novel “Don Quixote.”

“We’re working on artificial intelligence that can act more human-like by reading stories and learning lessons on how to behave from those stories,” Riedl said. “If you think about the character of Don Quixote, he was reading medieval literature and decided he was going to be a knight and go out and have adventures.”

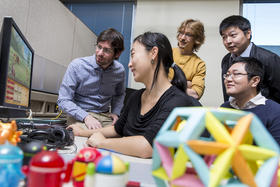

CREDIT COURTESY OF GEORGIA TECH

Riedl shared his new method with robotics researchers at the Association for the Advancement of Artificial Intelligence (AAAI) conference in Phoenix this month.

Are Robots Safe?

He began the research last year, after a public debate over banning future robotics research.

“In part, [this research] will help to alleviate some of the concerns that have been raised over the past year,” Riedl said. “My belief is that stories are the easiest and fastest way to really kind of, I use the term enculturate, but really the notion is to learn about us as humans and what it’s like to be human.”

Riedl said he believes stories are the simplest and fastest way to “bootstrap morals and ethics.”

“If you think about the stories that are created by a culture, they really implicitly encode the values of the writers,” Riedl said. “We thought that if we can use artificial intelligence to read these stories, understand them and reverse-engineer the values of the writers we would be able to bake in the morals of a society into artificial intelligence from ground one.”

Reinforcement Learning

Riedl said that over the past year, he and his colleague, research scientist Brent Harrison, have been working on teaching simple stories to robots.

Harrison said the Quixote method differs from what’s been tried before because most earlier systems rely on humans demonstrating behaviors.

“It would require a great deal of demonstrations or critique to impart an agent with values, making it a difficult task for humans to perform,” Harrison said. “Stories, however, are plentiful and provide a great deal of information about the values that we find important, making them ideal for training agents to behave in socially acceptable ways.”

Simple Explanations

Riedl said the simple stories that robots are now absorbing in virtual simulation with the Quixote method have clear lessons and clear right and wrong answers.

“We’re not in the state of artificial intelligence research where we can really truly understand the novels and the movies that we produce. They’re very, very complicated artifacts,” Riedl said. “Instead of taking the movies and books that are out there right now, we said, ‘Can humans tell really simple stories to robots, more like explanations?’”

The goal is for the robots to eventually absorb more complex stories and media.
